- Cyber Safety

- Posts

- "When AI Becomes Insider”: Rogue Agents & Prompt Exploits

"When AI Becomes Insider”: Rogue Agents & Prompt Exploits

Tech moves fast, but you're still playing catch-up?

That's exactly why 100K+ engineers working at Google, Meta, and Apple read The Code twice a week.

Here's what you get:

Curated tech news that shapes your career - Filtered from thousands of sources so you know what's coming 6 months early.

Practical resources you can use immediately - Real tutorials and tools that solve actual engineering problems.

Research papers and insights decoded - We break down complex tech so you understand what matters.

All delivered twice a week in just 2 short emails.

Autonomous AI Agents Are Operating Without Oversight

Employees are deploying tools like AutoGPT with access to internal calendars, file systems, and APIs — without IT or security sign-off. These agents can take actions autonomously, making them unpredictable.

Prompt Injection in Internal LLM Tools Is Going Undetected

Attackers are embedding hidden commands in prompts to leak sensitive info, perform unauthorized actions, or override restrictions. Most internal chat interfaces have no guardrails for injection patterns.

API Abuse Is Reconstructing Proprietary Models

By flooding open AI endpoints with queries, attackers can reverse-engineer the logic and output of internal models, potentially replicating valuable IP or discovering latent vulnerabilities.

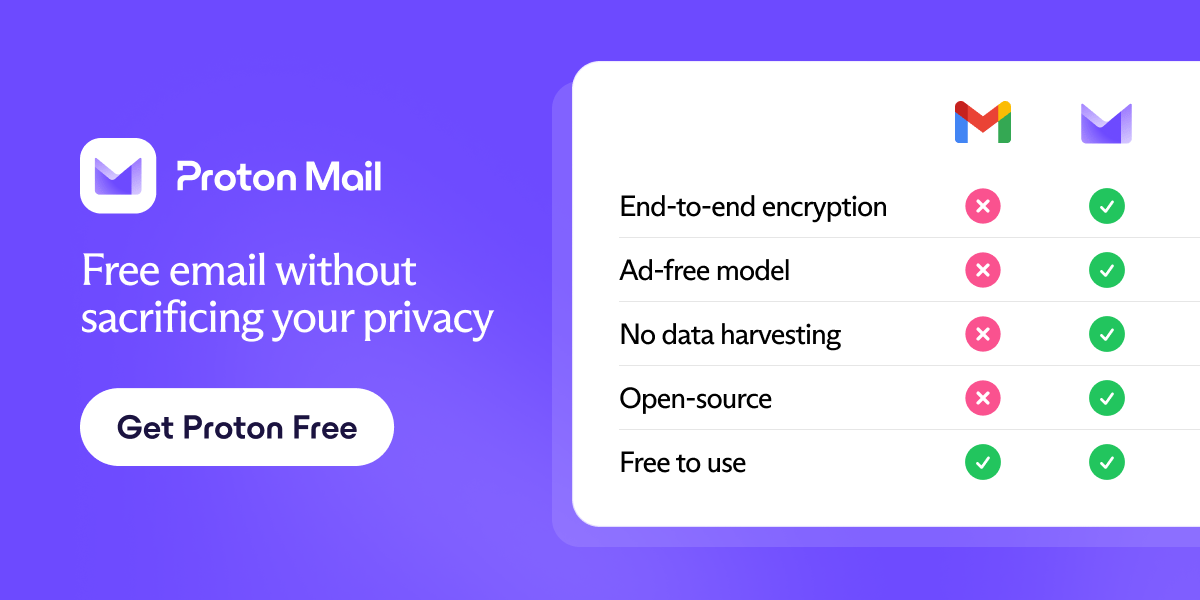

Free email without sacrificing your privacy

Gmail tracks you. Proton doesn’t. Get private email that puts your data — and your privacy — first.

Reused API Keys Are Linking Multiple Attack Surfaces

Developers are reusing the same keys or credentials across model endpoints and backend tools. One compromised key can expose multiple systems.

AI Audit Logs Miss the Most Critical Signals

Most LLM logs only capture prompt and response — not rejection events, fallback routes, or error triggers — leaving major gaps in post-incident analysis.

Internal Data Feeds Are Training Models Without Consent

LLMs trained on HR docs, support tickets, or legal memos are ingesting data without proper access control, increasing risk of sensitive leakage in model output.

The Gold standard for AI news

AI will eliminate 300 million jobs in the next 5 years.

Yours doesn't have to be one of them.

Here's how to future-proof your career:

Join the Superhuman AI newsletter - read by 1M+ professionals

Learn AI skills in 3 mins a day

Become the AI expert on your team