“Model Integrity: Poisoning, Inversion & Inference Risks”

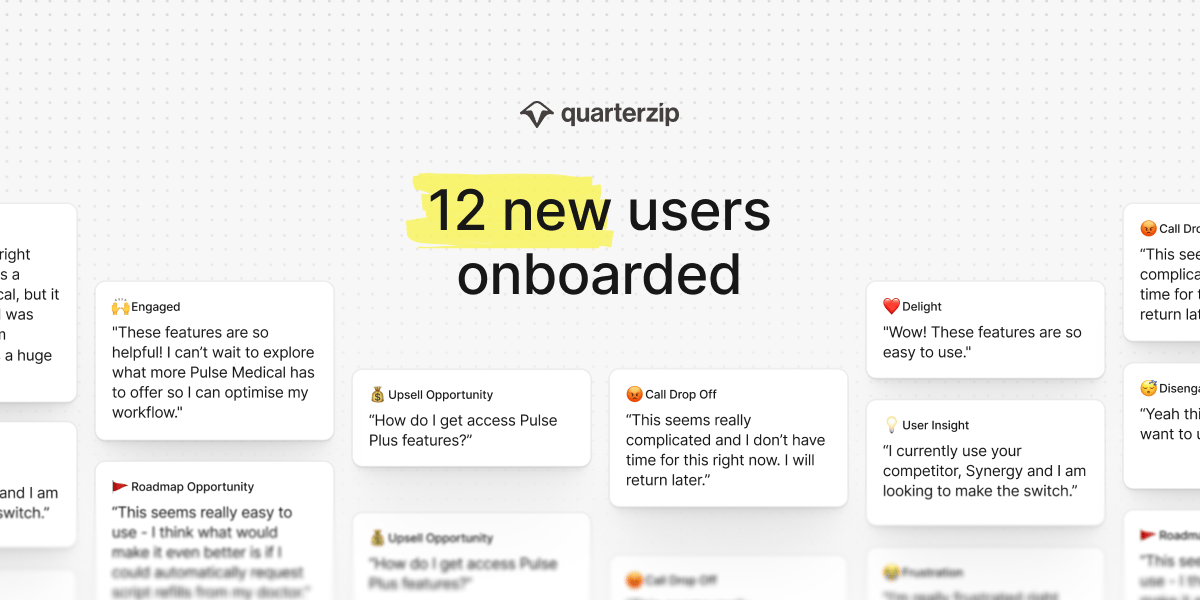

Training Data Poisoning Alters AI Model Behavior Subtly

Attackers inject malicious records into the datasets used to train internal models. These poisoned entries shift outputs in dangerous or biased directions. The impact often goes undetected until the behavior is baked into the deployed model.
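
One way to look for poisoned entries before training is a label-consistency check: flag any record whose label disagrees with most of its nearest neighbors. The sketch below is a minimal illustration under assumptions not in the original post (numeric feature vectors, Euclidean distance, and a hypothetical helper named `suspicious_records`). It would not catch carefully crafted poison that blends in with clean data.

```python
from collections import Counter
from math import dist


def suspicious_records(features, labels, k=3):
    """Return indices whose label differs from the majority label
    of their k nearest neighbours (Euclidean distance)."""
    flagged = []
    for i, point in enumerate(features):
        # Rank every other record by distance to record i, keep the k closest.
        neighbours = sorted(
            (j for j in range(len(features)) if j != i),
            key=lambda j: dist(point, features[j]),
        )[:k]
        majority_label, _ = Counter(labels[j] for j in neighbours).most_common(1)[0]
        if majority_label != labels[i]:
            flagged.append(i)
    return flagged
```

Flagged records are candidates for manual review, not proof of an attack. Mislabeled but honest data will show up too.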

Model Inversion Can Reveal Sensitive Training Data

By carefully probing a deployed model, attackers can reconstruct details from its training data. This can include names, passwords, or medical records. Even anonymized datasets are at risk, because a model can memorize rare or unique records.
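
Inversion-style probing leans on the detail a model returns, so one commonly suggested mitigation is to return less of it. The sketch below assumes a classifier that exposes a per-label probability dictionary. The function name `harden_prediction` and the rounding precision are illustrative choices, not part of any specific product.

```python
def harden_prediction(probabilities, decimals=1):
    """Return only the top label and a coarsely rounded confidence,
    instead of the full probability vector."""
    top_label = max(probabilities, key=probabilities.get)
    return {
        "label": top_label,
        "confidence": round(probabilities[top_label], decimals),
    }
```

This reduces the signal available to an attacker but does not remove it. Treat it as one layer alongside rate limiting and access control.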

Inference Attacks Target What the Model Has Learned

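Threat actors analyze AI outputs to deduce patterns and user-specific knowledge, such as whether a particular record was part of the training data. These attacks compromise privacy without any access to the underlying system; the ability to query the model is enough. They’re especially dangerous for chatbots trained on internal data.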
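
A team can estimate its own exposure with a simple confidence-threshold membership test: models tend to be more confident on records they were trained on, so a large gap between training and held-out confidence is a warning sign. The sketch below assumes you already have the model's confidence scores for both groups. The name `attack_advantage` and the 0.9 threshold are assumptions made for illustration.

```python
def attack_advantage(member_conf, nonmember_conf, threshold=0.9):
    """Estimate how well a 'confidence >= threshold means member' guess works.

    Returns true-positive rate minus false-positive rate:
    close to 0 means little leakage, close to 1 means heavy leakage."""
    true_positive_rate = sum(c >= threshold for c in member_conf) / len(member_conf)
    false_positive_rate = sum(c >= threshold for c in nonmember_conf) / len(nonmember_conf)
    return true_positive_rate - false_positive_rate
```

With training-set confidences `[0.99, 0.97, 0.95, 0.80]` and held-out confidences `[0.60, 0.92, 0.70, 0.55]`, the function returns `0.5`, which would suggest the model leaks membership noticeably.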

Internal AI Systems Often Lack Access Controls

Many companies deploy models without limiting who can prompt them. Sensitive data can be extracted accidentally or intentionally. Often, prompts are neither logged nor filtered.
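
A thin wrapper in front of the model can address both gaps: check the caller's role, and write every attempt to an audit log. The sketch below is hypothetical throughout. The role names, the `guarded_prompt` function, and the `model_fn` callable stand in for whatever identity system and model client an organization actually uses.

```python
import logging

audit_log = logging.getLogger("prompt_audit")

# Hypothetical allowlist; in practice this would come from your identity provider.
ALLOWED_ROLES = {"analyst", "ml-engineer"}


class PromptDenied(PermissionError):
    """Raised when a caller is not allowed to prompt the model."""


def guarded_prompt(user, role, prompt, model_fn):
    """Check the caller's role, log the attempt, then forward the prompt."""
    if role not in ALLOWED_ROLES:
        audit_log.warning("DENIED user=%s role=%s", user, role)
        raise PromptDenied(f"role {role!r} may not prompt this model")
    # The audit log itself holds prompt text, so it needs access control too.
    audit_log.info("PROMPT user=%s role=%s prompt=%r", user, role, prompt)
    return model_fn(prompt)
```

Keeping the check and the log in one place means a denied request still leaves a trace, which is often the first sign of someone probing the system.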

Security Teams Lack Tools to Validate AI Outputs

Unlike code or infrastructure, model logic is opaque to auditors. Poisoning or bias may persist undetected across multiple versions. Teams need new tools for AI-specific validation and testing.
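
One starting point for AI-specific testing is a fixed "golden set" of prompts with known-good answers, re-run against every new model version. The sketch below assumes the model can be called as a plain function and that answers can be compared exactly. The name `validate_model` and the 95% agreement bar are illustrative, and exact-match comparison would need loosening for free-text outputs.

```python
def validate_model(model_fn, golden_set, min_agreement=0.95):
    """Run each golden prompt through the model and compare with the expected answer.

    Returns (passed, mismatches), where mismatches lists
    (prompt, expected, actual) for every disagreement."""
    mismatches = []
    for prompt, expected in golden_set:
        actual = model_fn(prompt)
        if actual != expected:
            mismatches.append((prompt, expected, actual))
    agreement = 1 - len(mismatches) / len(golden_set)
    return agreement >= min_agreement, mismatches
```

Running the same set against each release makes a silent behavior shift between versions visible as a concrete list of changed answers.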

Open-Source Models Used Without Trust Validation

Developers download pre-trained models from GitHub or Hugging Face with no vetting. These may contain backdoors or malicious weights. AI supply chain risk is real and spreading fast.
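
A basic vetting step is to pin the SHA-256 digest of a model file you have reviewed and refuse to load anything that does not match. The sketch below uses only Python's standard library. The function names are hypothetical, and the expected digest is assumed to come from a source you trust. A matching checksum shows the file is the one you vetted; it says nothing about whether the original weights were safe.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path, expected_sha256):
    """Return True only if the file's digest matches the pinned value."""
    return hmac.compare_digest(sha256_of_file(path), expected_sha256.lower())
```

Calling `verify_model_file` before any load step turns "no vetting" into a hard gate: a swapped or tampered file fails the check and never reaches the model loader.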
